Russian scientists have developed the world's first open virtual environment for self-learning AI.

While the media often portrays the evolution of modern AI as a continuous success story, the reality is considerably more complex. Controlling autonomous vehicles and drones remains a challenge: even the industry leaders, such as Waymo and Tesla, occasionally run red lights or honk at one another at night, even though driverless cars, unlike human drivers, have little use for audible signals.

Such incidents are not coincidental. They point to one of the bottlenecks of contemporary AI: it performs well in situations it was "trained" for on large datasets, but often falters when faced with rare and complex cases that are weakly represented, or not represented at all, in its training data.

Researchers are, of course, trying to address this problem. One approach is in-context reinforcement learning (In-Context RL). This relatively new technique allows a model to adapt quickly to new tasks based on the context it is given, without extensive retraining from scratch.

As a result, AI can interact effectively even with very complex environments and keep learning on the fly. In-Context RL is considered promising for personalized recommendations for online shoppers, robot control, and autonomous vehicles. In other words, it is most in demand wherever near-instant adaptation to fundamentally new conditions is required.

However, training such AI requires a specialized virtual environment, a kind of digital testing ground. Existing environments of this kind fall into two categories. Some are sophisticated, such as Google DeepMind's XLand, but are proprietary and closed to outside researchers. Others are open but relatively simple, offering only uniform, easy tasks, which makes it hard to demonstrate meaningful research progress on them. The T-Bank AI Research lab therefore decided to build its own open virtual environment.

“We entered the field of in-context reinforcement learning when it was still in its infancy, and we found no suitable tools for evaluating new ideas. It became clear that many specialists faced the same problem, so it had to be one of the first things we solved. This is how XLand-MiniGrid came to be,” said Vyacheslav Siniy, a researcher in the AI Alignment group at the T-Bank AI Research lab.

A scientific paper describing the new virtual environment has been accepted at NeurIPS 2024, the largest international AI conference, and will be presented there from December 10 to 15 in Vancouver, Canada. Even before the conference, the environment has already been used in a number of studies by researchers at leading international AI research centers.

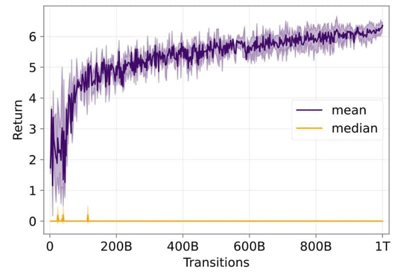

The new environment was built with JAX, a Python library for high-performance numerical computing. As a result, unlike slower open-source alternatives, XLand-MiniGrid performs billions of operations per second.
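To give a rough sense of where the speed of JAX-based environments comes from, here is a minimal sketch of the general pattern such environments typically rely on: write the step function for a single environment, then use `jax.vmap` to batch it over thousands of environments and `jax.jit` to compile it. This is not XLand-MiniGrid's code; the toy grid world, `GRID_SIZE`, the action encoding, and `step` are hypothetical and exist only for illustration.

```python
import jax
import jax.numpy as jnp

GRID_SIZE = 9  # hypothetical grid size for this toy example

# Hypothetical action encoding: 0 = up, 1 = right, 2 = down, 3 = left
MOVES = jnp.array([[0, -1], [1, 0], [0, 1], [-1, 0]])


def step(pos, action):
    """Move a single agent by one cell, clamping it to the grid boundaries."""
    new_pos = pos + MOVES[action]
    return jnp.clip(new_pos, 0, GRID_SIZE - 1)


# vmap turns the single-environment step into a batched one;
# jit compiles the batched function with XLA so it runs as one fused kernel.
batched_step = jax.jit(jax.vmap(step))

num_envs = 4096
key = jax.random.PRNGKey(0)
pos_key, act_key = jax.random.split(key)
positions = jax.random.randint(pos_key, (num_envs, 2), 0, GRID_SIZE)
actions = jax.random.randint(act_key, (num_envs,), 0, 4)

# One call advances all 4096 toy environments at once.
new_positions = batched_step(positions, actions)
print(new_positions.shape)  # (4096, 2)
```

Because the per-environment logic is written once and batched automatically, adding more parallel environments mostly costs accelerator memory rather than extra Python overhead.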

It also comes with 100 billion examples of AI actions across 30,000 tasks, so developers can train on ready-made datasets instead of collecting data from scratch each time. These features make research in the field easier and can speed up new discoveries.

Moreover, unlike existing high-complexity environments, XLand-MiniGrid is open and publicly available on GitHub.